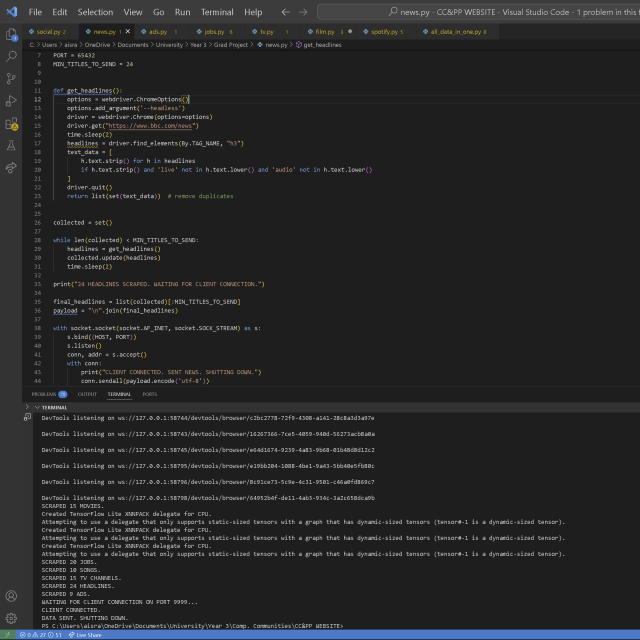

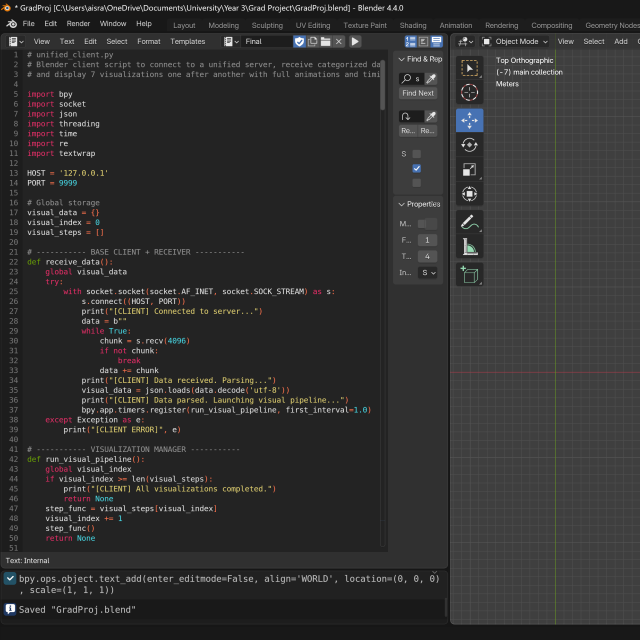

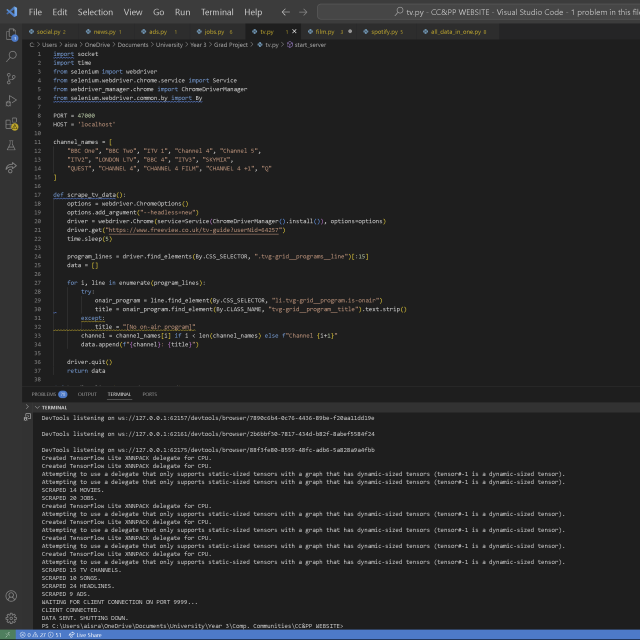

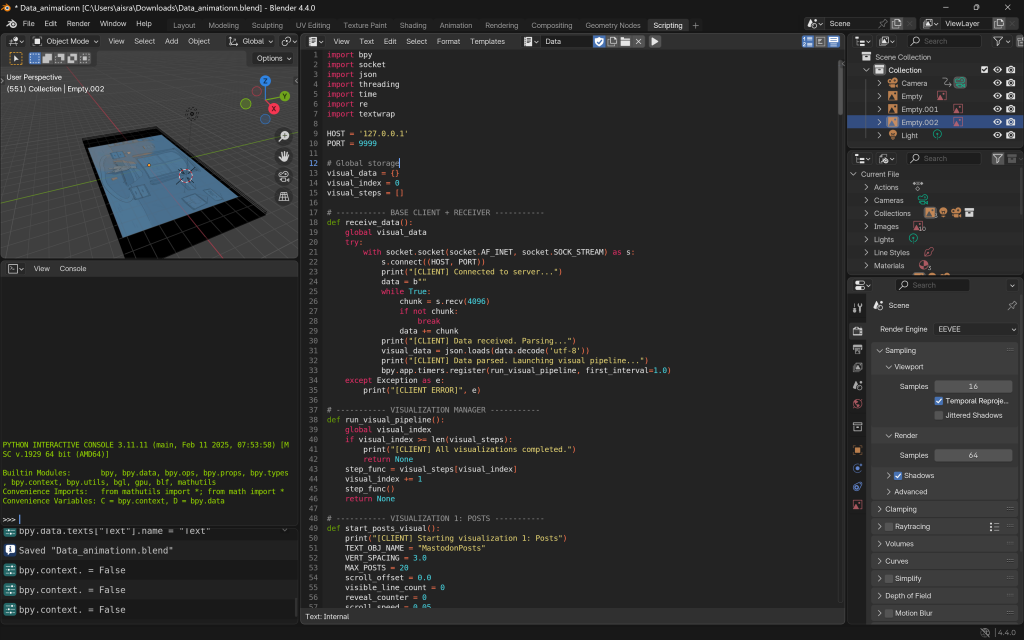

I first spent extensive time combining the server-side and client-side scripts, respectively, while retaining the core animation functionality of each individual data type, such as news media and social media.

This was quite difficult but eventually manageable, and I got my visualisations back.

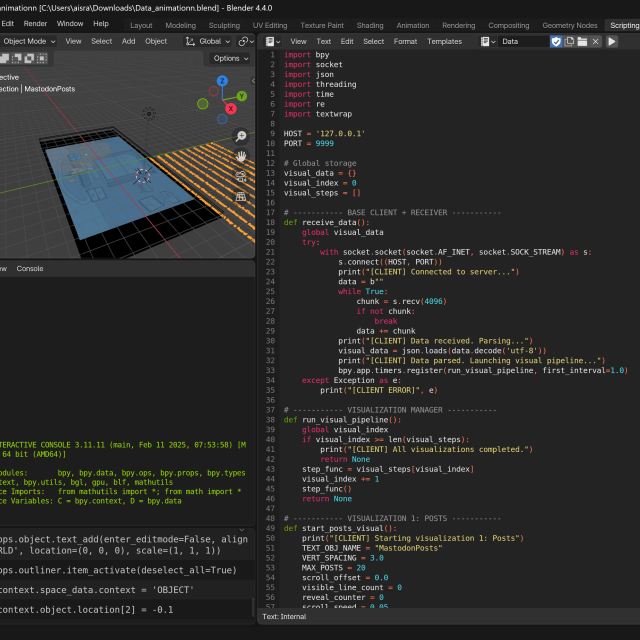

I made sure the server printed messages confirming that it had scraped, sent, and shut down.

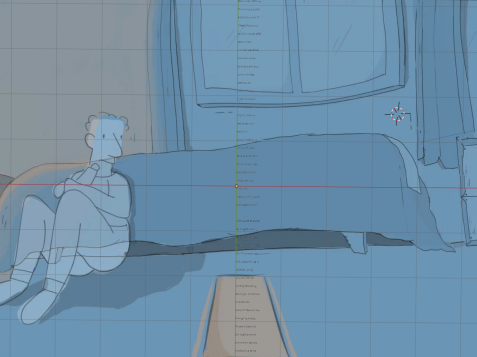

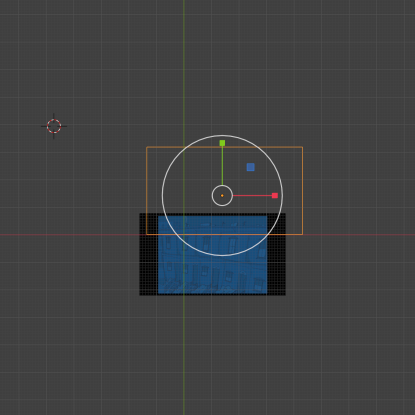

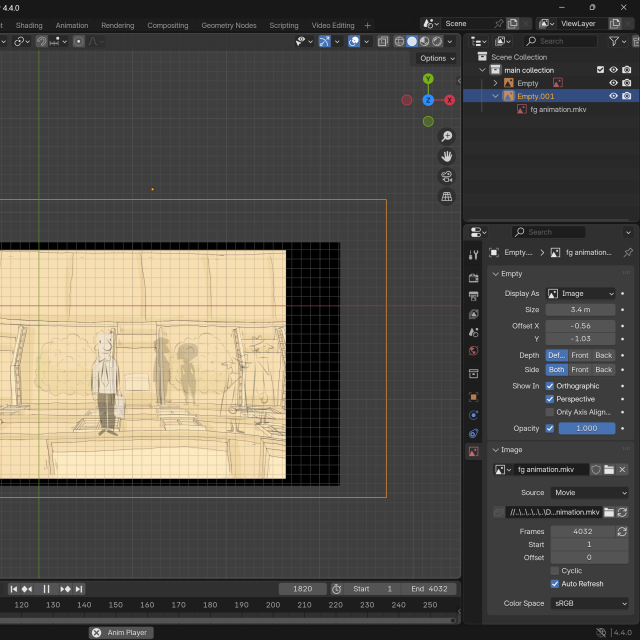

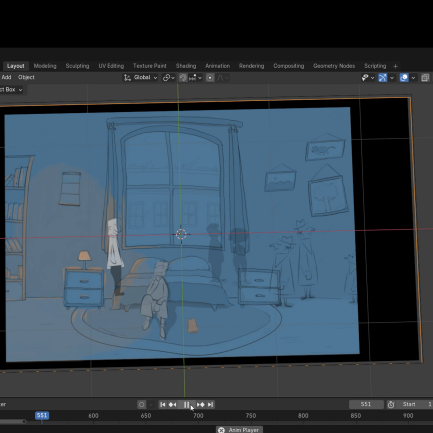

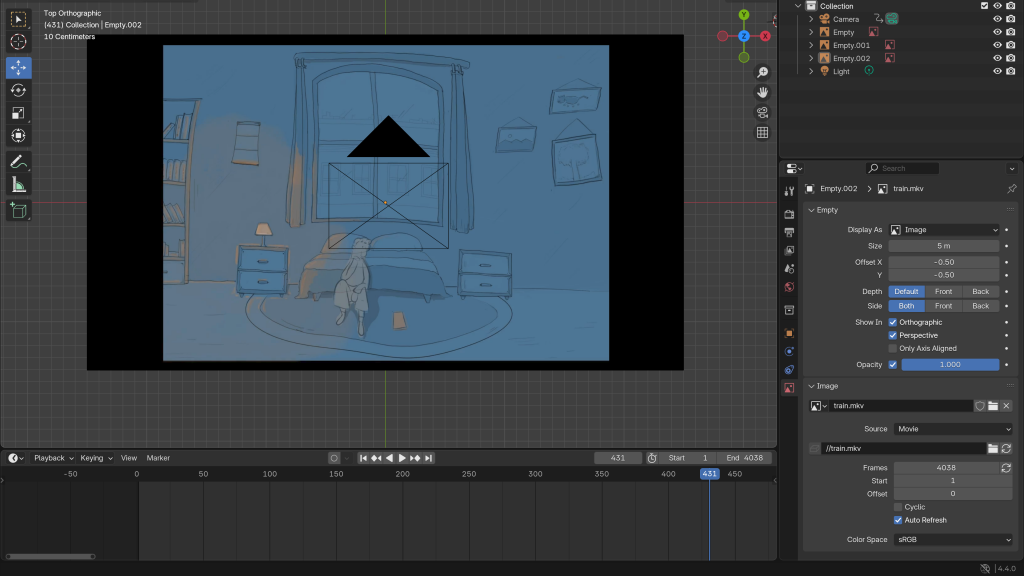

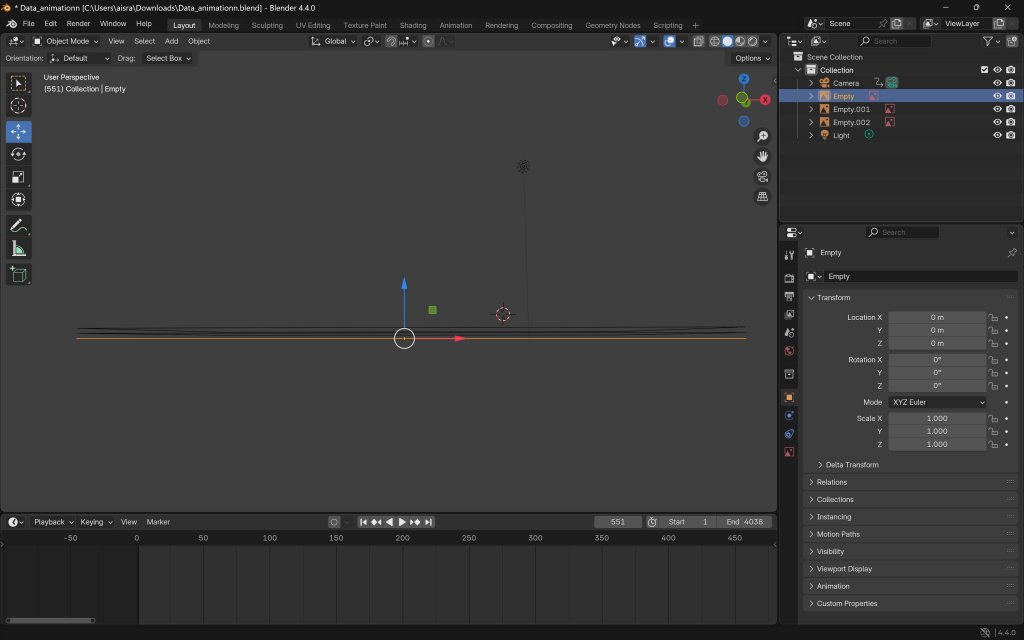

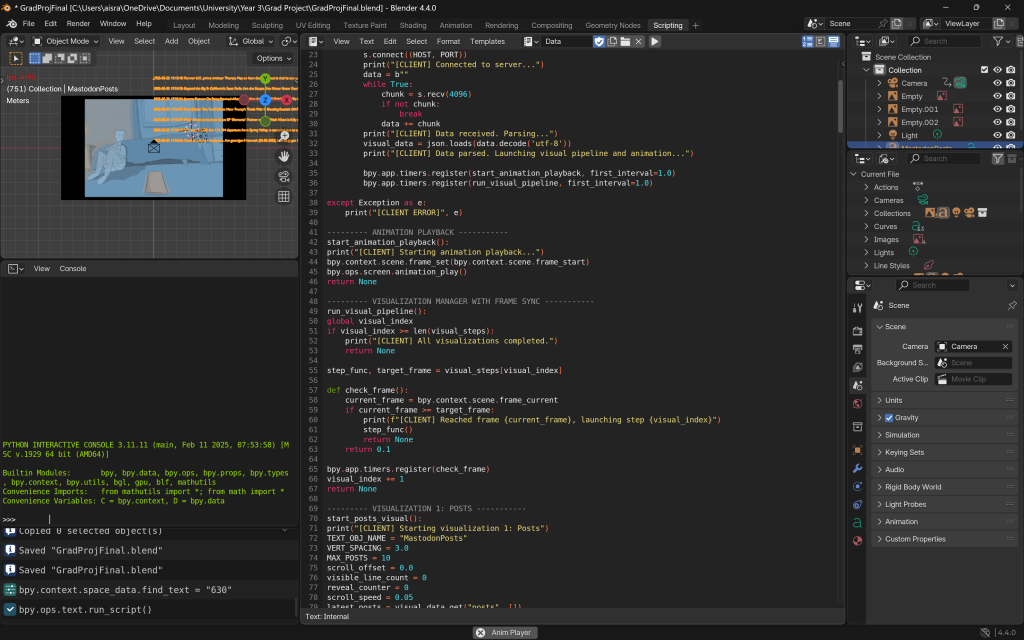

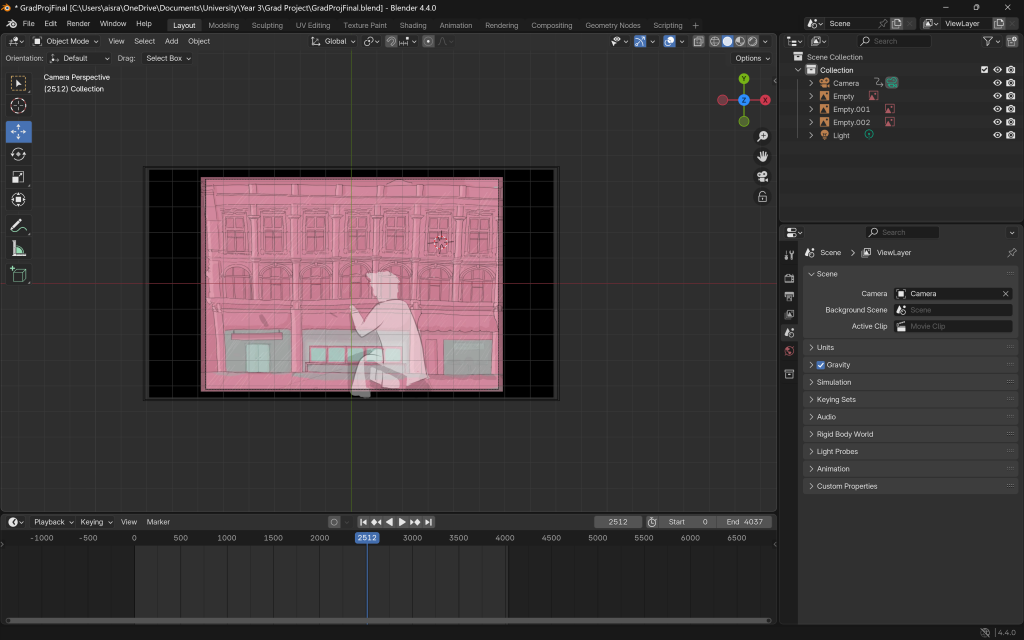

I then brought in the three animation video files.

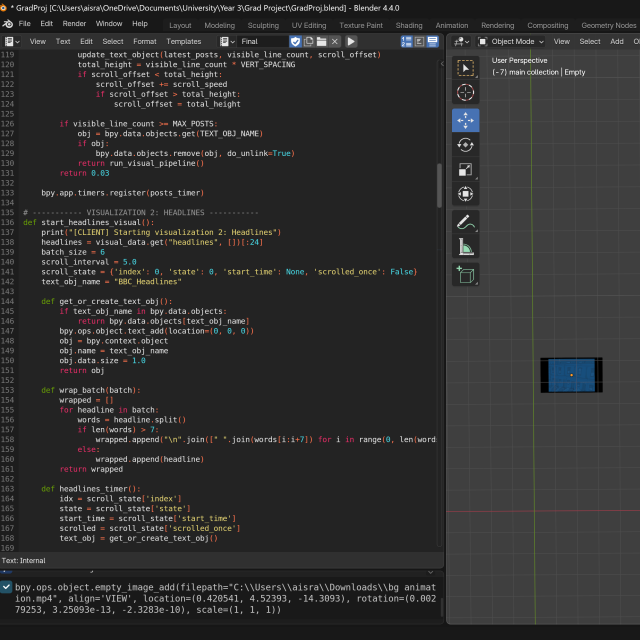

Syncing them was troublesome, as several kept overlapping and causing glitches.

Once this was resolved, I added camera movements so viewers could see the visualised data more clearly.

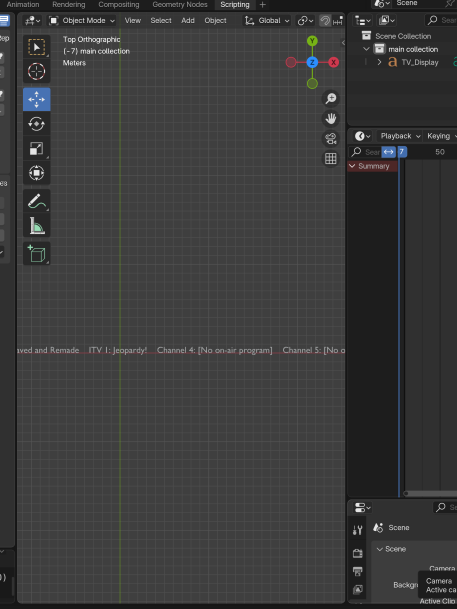

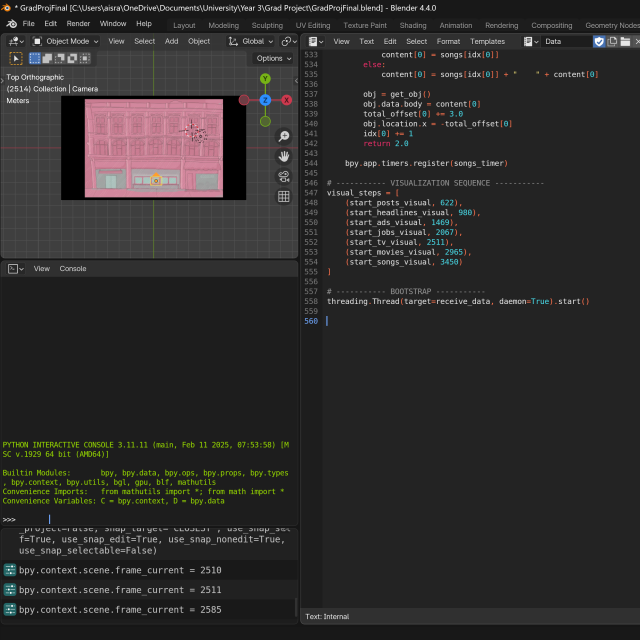

The video planes were carefully layered and checked for mistakes, and I made it possible to trigger the animation playback from the scripting tab.

I added timers and checks to control the timing of each data set and to ensure that one data set was cleared before the next one started to be visualised.
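The timer-and-check idea can be sketched in Python as below. This is a minimal illustration rather than the project's actual script: the `Sequencer` class, the data set names, and the durations are all hypothetical, and `update` is assumed to be called once per frame with the current time in seconds.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Sequencer:
    """Shows one data set at a time, clearing it before the next starts."""
    durations: dict            # hypothetical: seconds each data set stays visible
    order: list                # hypothetical: order in which data sets are shown
    active: Optional[str] = None
    log: list = field(default_factory=list)
    _index: int = 0
    _started_at: float = 0.0

    def update(self, now: float) -> None:
        """Call once per frame with the current time in seconds."""
        if self.active is not None:
            if now - self._started_at < self.durations[self.active]:
                return  # the current data set is still playing
            # Timer expired: clear the current data set before anything else starts.
            self.log.append(f"clear {self.active}")
            self.active = None
        if self._index < len(self.order):
            # Nothing is on screen at this point, so the next data set can start.
            self.active = self.order[self._index]
            self._index += 1
            self._started_at = now
            self.log.append(f"start {self.active}")
```

Because a new data set can only start in the branch reached after `active` has been reset to `None`, two data sets can never be visible at once, whatever the frame timing is.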

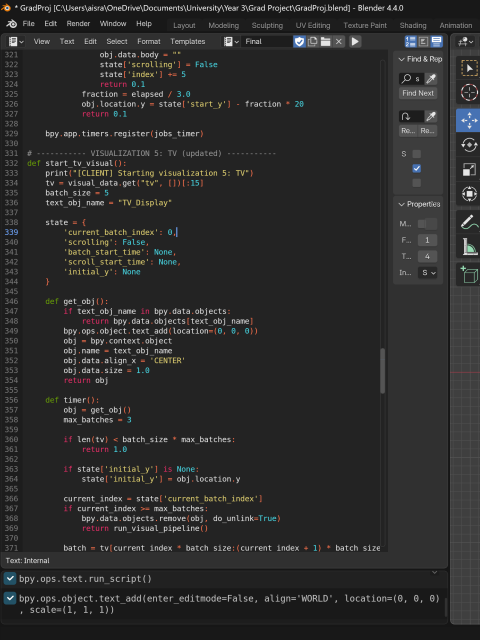

I then spent time positioning the data in the correct place in the animation.

I noticed a few glitches and flaws, but these depended on my framerate; often, my connection was not good enough to tell whether I was getting smooth playback or not.

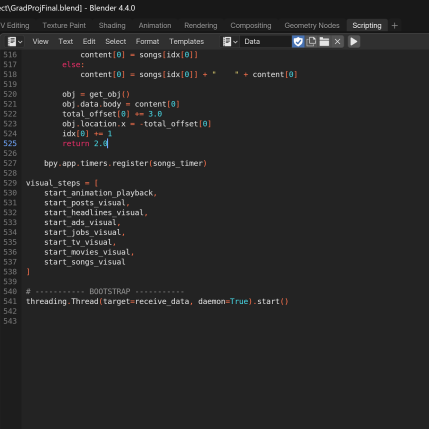

I then specified the frame of the animation on which each visualisation should start. Working out how to do this took significant time and effort.
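One way to express frame-based start points is a lookup table checked on every update. The sketch below is an assumption about how this could be done, not the method used in the project: the frame numbers, the names in `START_FRAMES`, and the function `visualisations_to_start` are all hypothetical.

```python
# Hypothetical start frames for each visualisation.
START_FRAMES = {"news": 120, "social": 480, "ads": 900}


def visualisations_to_start(previous_frame: int, current_frame: int) -> list:
    """Return the visualisations whose start frame was reached since the last check."""
    return [
        name
        for name, frame in START_FRAMES.items()
        # A range check rather than `frame == current_frame`, so a start point
        # is still caught when playback skips frames.
        if previous_frame < frame <= current_frame
    ]
```

Comparing against a range of frames instead of a single frame matters when playback is not smooth: if the video jumps from frame 470 to frame 905, both the `social` and `ads` start points are still detected.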

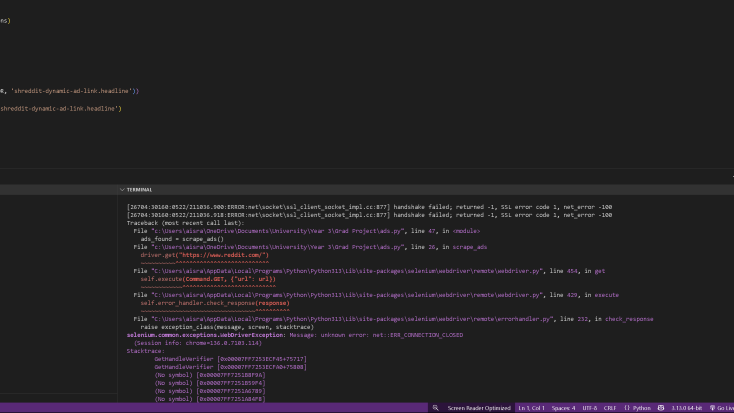

At times, Reddit ads in particular wouldn’t scrape. I added functionality so that if a source did not scrape, the server would give up on it after a while and send the client whatever it had.

The data was then animated in the correct place, at the correct pace, and in the correct way, which took many iterations and a lot of fiddling.
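A give-up-after-a-while fallback of this kind can be sketched with Python's standard `concurrent.futures` module. This is only an illustration under assumptions: the function `scrape_all`, the shape of the `scrapers` mapping, and the timeout value are hypothetical, and the real server may be structured quite differently.

```python
import concurrent.futures as cf


def scrape_all(scrapers: dict, timeout_s: float) -> dict:
    """Run every scraper in parallel and keep whatever finishes within timeout_s.

    `scrapers` maps a source name to a zero-argument function (hypothetical shape).
    """
    results = {}
    pool = cf.ThreadPoolExecutor(max_workers=max(1, len(scrapers)))
    futures = {pool.submit(fn): name for name, fn in scrapers.items()}
    # Wait until everything has finished or the timeout elapses, whichever is first.
    done, _not_done = cf.wait(list(futures), timeout=timeout_s)
    for future in done:
        if future.exception() is None:  # skip scrapers that raised an error
            results[futures[future]] = future.result()
    # Do not block on stragglers. A scraper that is already running is not
    # interrupted; its result is simply ignored.
    pool.shutdown(wait=False)
    return results
```

The server could then send `results` to the client as-is, so a source that fails or hangs just means one visualisation has no data instead of the whole run stalling.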