I first tried to scrape Meta ads through Meta's Ad Library, but I was unsuccessful: the process was difficult and ran into a lot of obstacles.

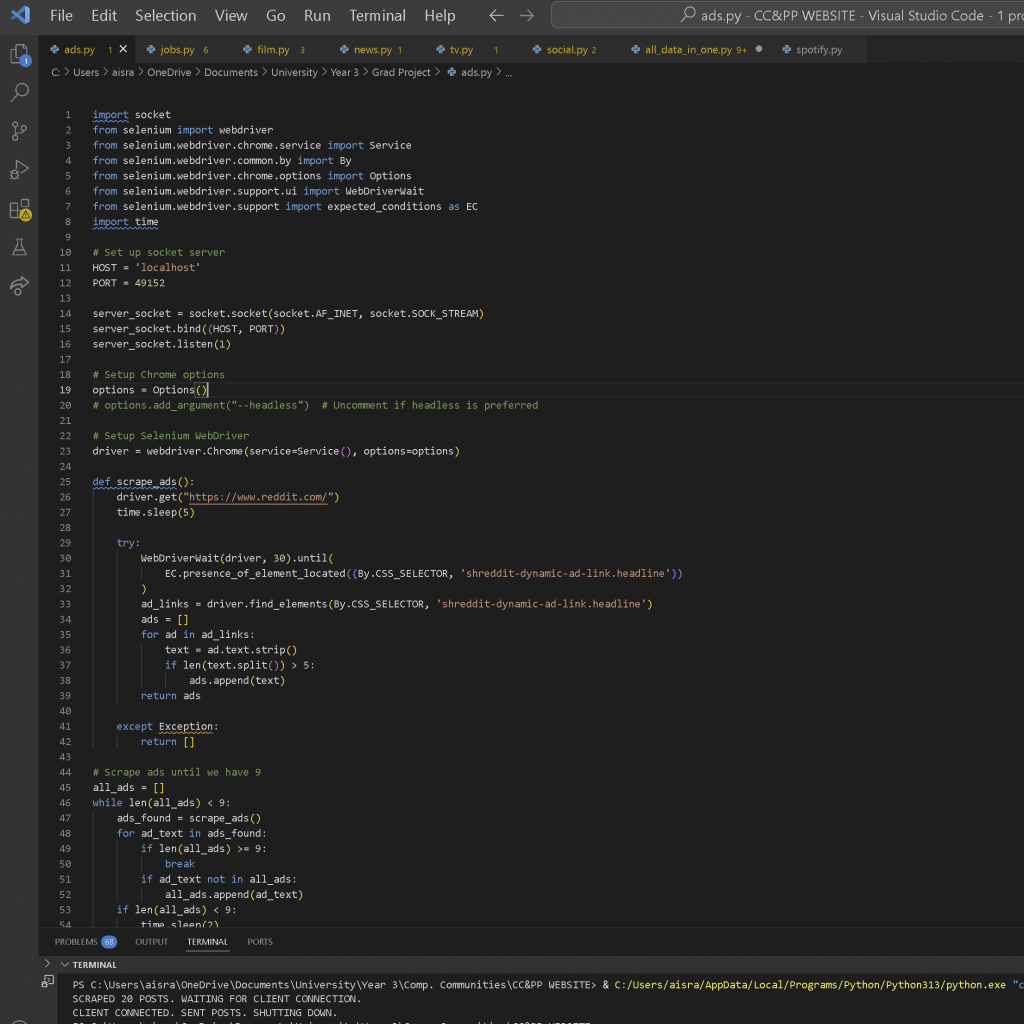

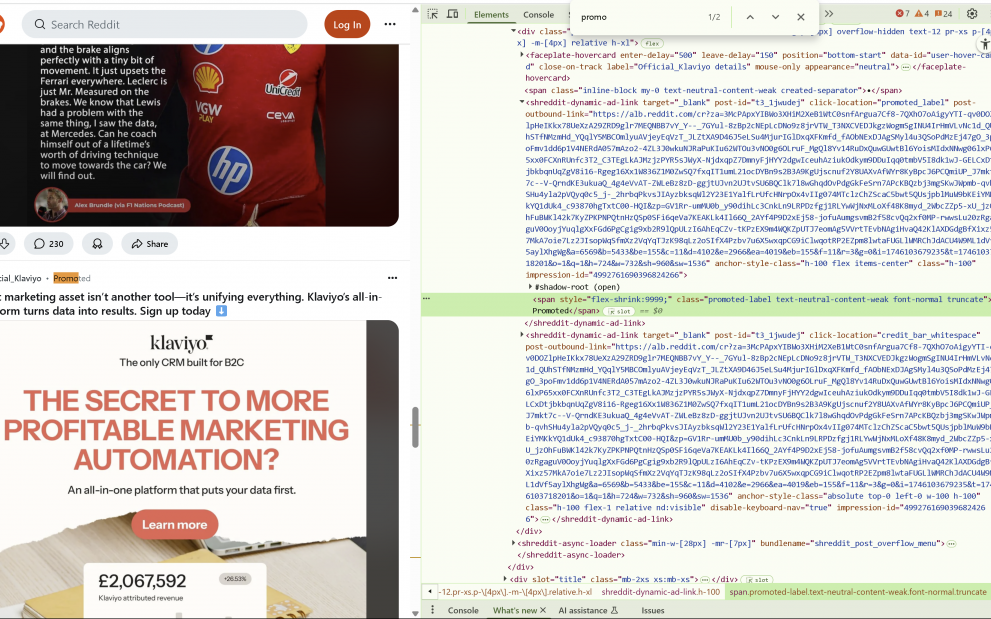

So I switched to scraping specifically the posts that carry the “Promoted” label on Reddit. I started off fairly simply, using the browser's Inspect tab to see how the site was structured and which elements and classes the “Promoted” label sat under. This took a while and several attempts. For example, at first I did not realise that the backslash in `.xs\:mb-xs` is needed to escape the colon in a CSS selector, and I made a few other minor oversights. Once these were fixed, I was able to get at least some results from the scraping.
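To illustrate why the backslash matters, here is a minimal sketch of a helper that turns a raw class name into a CSS class selector by backslash-escaping characters such as `:`. The helper name `class_selector` is hypothetical, and the escaping is simplified: it does not cover class names that start with a digit, which need a hex escape.

```python
import re

# Simplified set of characters that may appear unescaped in a CSS class name:
# ASCII letters, digits, hyphen and underscore. Anything else (e.g. the ":" in
# Tailwind-style classes such as "xs:mb-xs") is prefixed with a backslash.
_SAFE_CHAR = re.compile(r"[A-Za-z0-9_-]")


def class_selector(class_name: str) -> str:
    """Return a CSS class selector for class_name, escaping unsafe characters.

    Hypothetical helper; it does not handle names that start with a digit.
    """
    escaped = "".join(
        ch if _SAFE_CHAR.fullmatch(ch) else "\\" + ch for ch in class_name
    )
    return "." + escaped


if __name__ == "__main__":
    # Without the escape, ".xs:mb-xs" would be read as class "xs" followed by
    # an unknown ":mb-xs" pseudo-class, so the selector is rejected as invalid.
    print(class_selector("xs:mb-xs"))  # prints .xs\:mb-xs
```

With Selenium, the result could be passed as `driver.find_elements(By.CSS_SELECTOR, class_selector("xs:mb-xs"))`. Writing the selector by hand also works, either as a raw string (`r".xs\:mb-xs"`) or with the backslash doubled in a normal Python string (`".xs\\:mb-xs"`), which avoids an invalid-escape warning.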

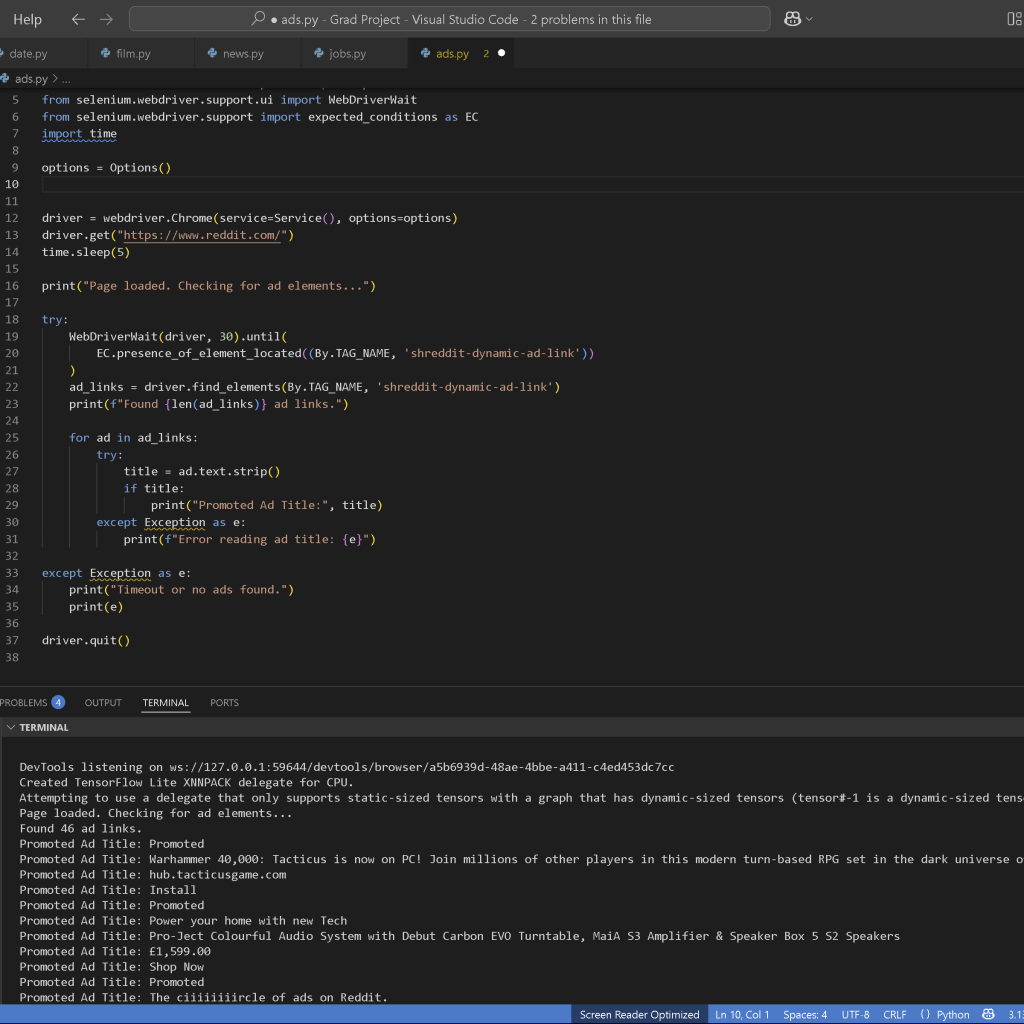

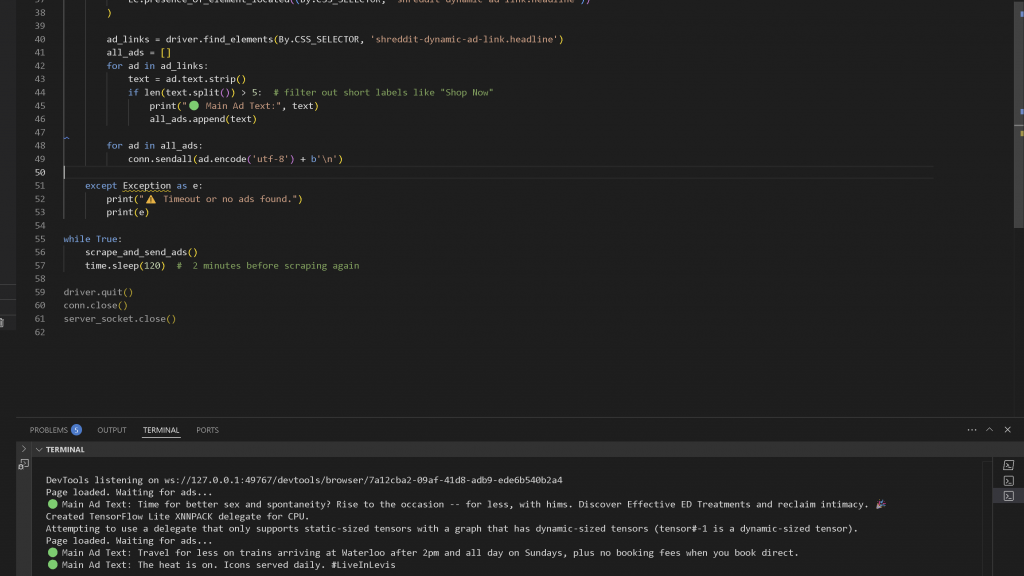

With this, I wrote a basic scraper that collected everything under the `<shreddit-dynamic-ad-link>` element. This had some issues: the content under this element was too broad, and most of it was not the kind of text that could realistically appear, and therefore be visualised, on a tube advert. I also started hitting timeouts, and ChatGPT suggested that the 'shreddit-dynamic-ad-link elements are taking longer to load, or Reddit is blocking/obfuscating content for headless browsers'.
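One common mitigation for slow-loading elements is an explicit wait: poll for the elements until they appear or a deadline passes, instead of failing straight away. Selenium provides this as `WebDriverWait(driver, timeout).until(...)`; the sketch below shows the same idea using only the standard library, so it can run without a browser. The function `wait_for` and its parameters are illustrative assumptions, and a wait like this would not help if Reddit is deliberately hiding content from headless browsers.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_for(
    probe: Callable[[], Optional[T]],
    timeout: float = 20.0,
    interval: float = 0.5,
) -> T:
    """Call probe() until it returns a truthy value or `timeout` seconds pass.

    Mirrors the idea behind Selenium's WebDriverWait: in a scraper, probe
    could be a lambda that returns the list of matching elements.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = probe()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)


if __name__ == "__main__":
    # Simulated page: the "ads" only appear on the third poll.
    polls = {"count": 0}

    def fake_find_ads():
        polls["count"] += 1
        return ["ad"] if polls["count"] >= 3 else []

    print(wait_for(fake_find_ads, timeout=5, interval=0.01))  # prints ['ad']
```

In Selenium itself, the equivalent would be along the lines of `WebDriverWait(driver, 20).until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, "shreddit-dynamic-ad-link")))`, with `EC` imported from `selenium.webdriver.support.expected_conditions`.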

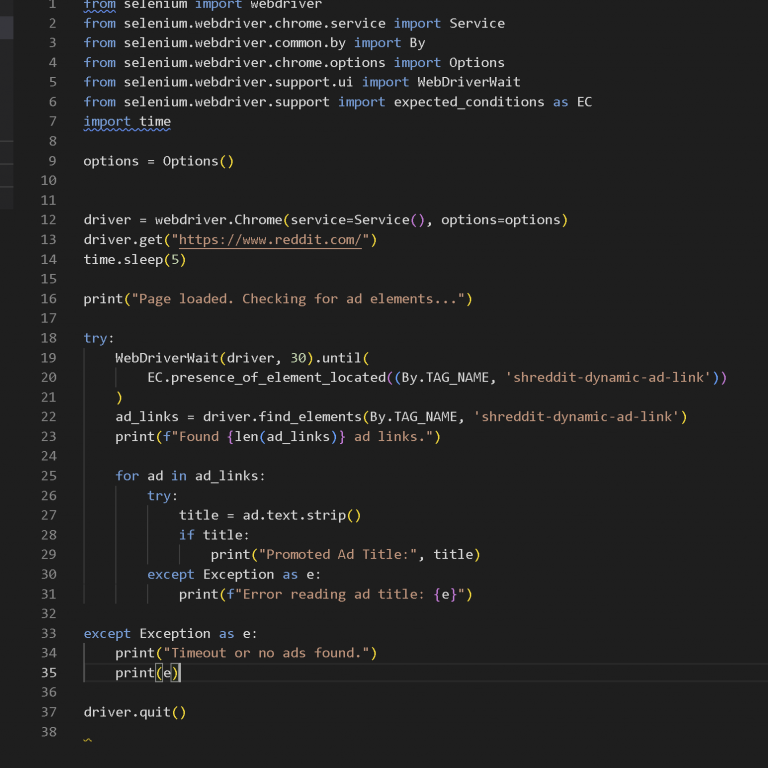

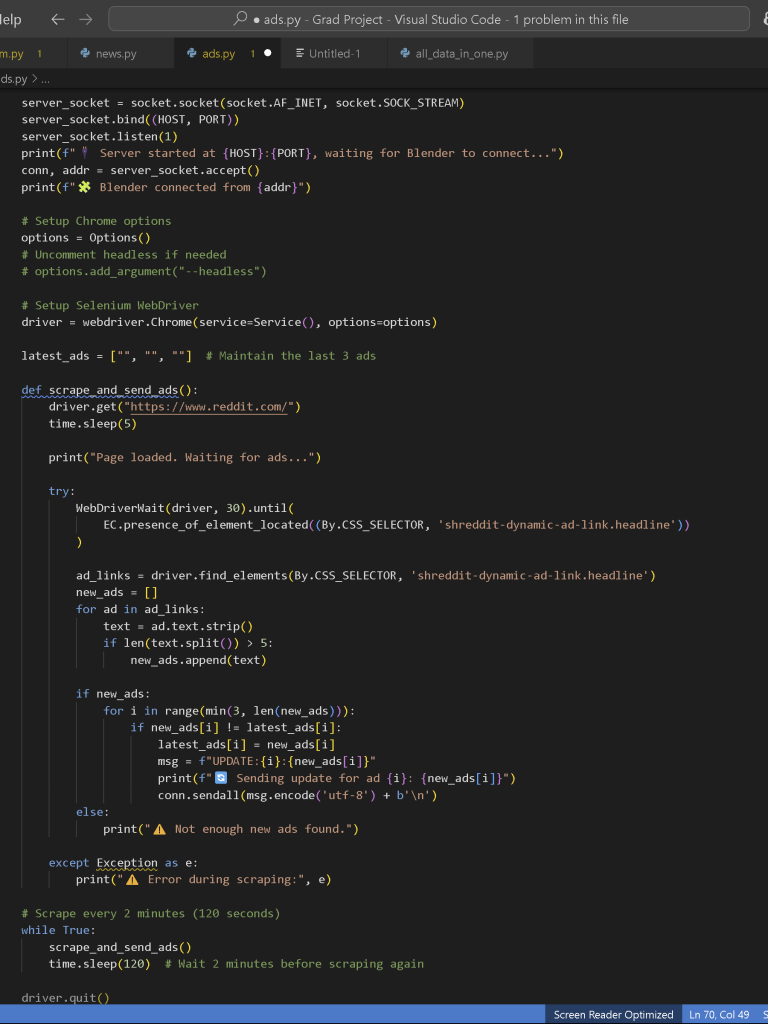

The next step was to make sure only the right things showed up: titles that could realistically appear on a tube advert. To do this, I narrowed the selection to headline content only, using `By.CSS_SELECTOR, 'shreddit-dynamic-ad-link.headline'`, and skipped short labels such as “Shop” and “Listen” by keeping only texts longer than five words (`if len(text.split()) > 5`).
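The word-count rule can be captured in a small pure function, which makes it easy to check without a browser. In the scraper, the input would be the `.text` of each element returned by `driver.find_elements(By.CSS_SELECTOR, "shreddit-dynamic-ad-link.headline")`. The function name and the whitespace clean-up are additions for illustration, and the sample strings are made up.

```python
from typing import Iterable, List


def tube_ready_titles(texts: Iterable[str], min_words: int = 5) -> List[str]:
    """Keep only texts with more than `min_words` words.

    This drops short call-to-action labels such as "Shop" or "Listen",
    matching the `len(text.split()) > 5` check.
    """
    kept = []
    for text in texts:
        cleaned = " ".join(text.split())  # collapse stray whitespace/newlines
        if len(cleaned.split()) > min_words:
            kept.append(cleaned)
    return kept


if __name__ == "__main__":
    # Illustrative strings, not real scraped ads.
    scraped = ["Shop", "Listen", "Learn more", "Try our new app free for thirty days today"]
    print(tube_ready_titles(scraped))  # prints ['Try our new app free for thirty days today']
```

Note that the threshold removes anything of five words or fewer, not only one-word labels, so short but genuine headlines are dropped too.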

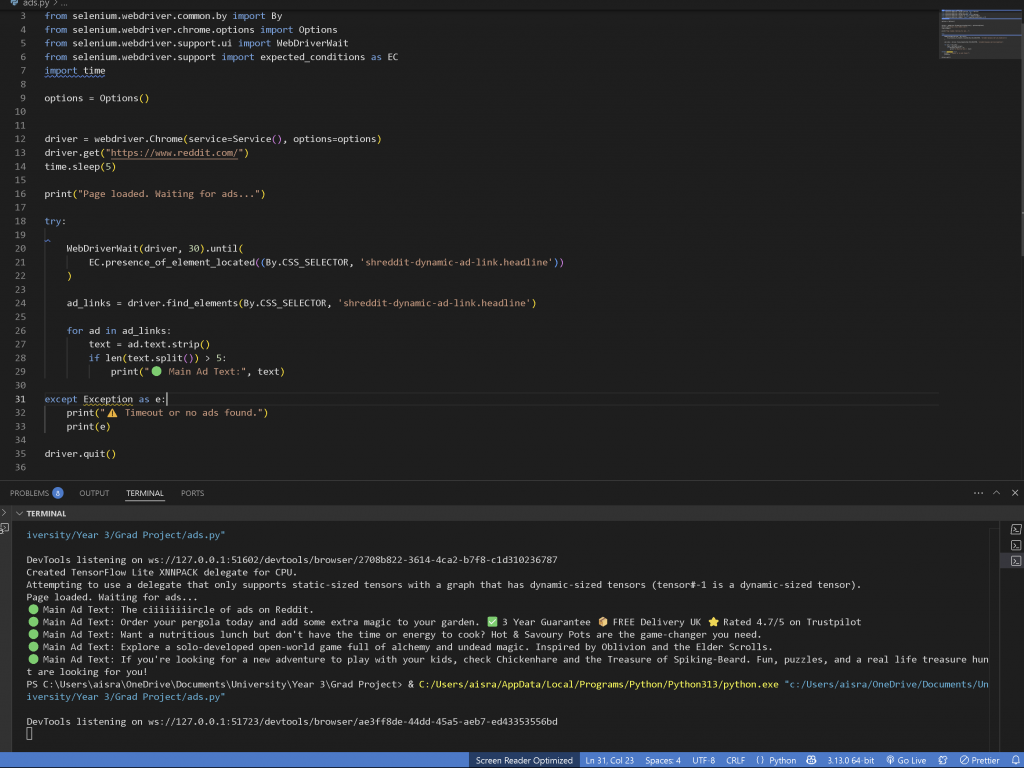

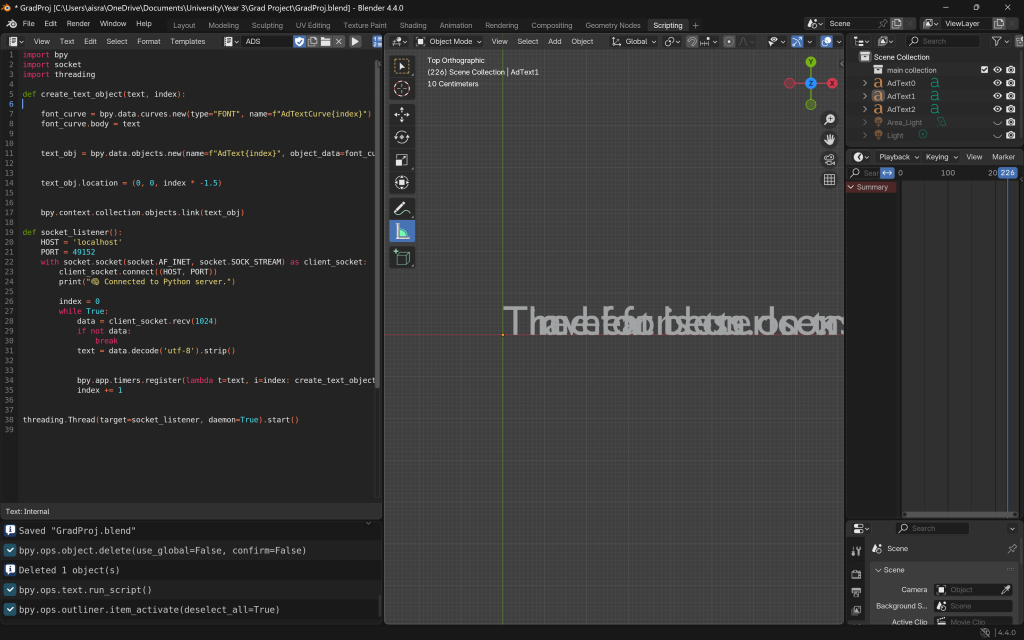

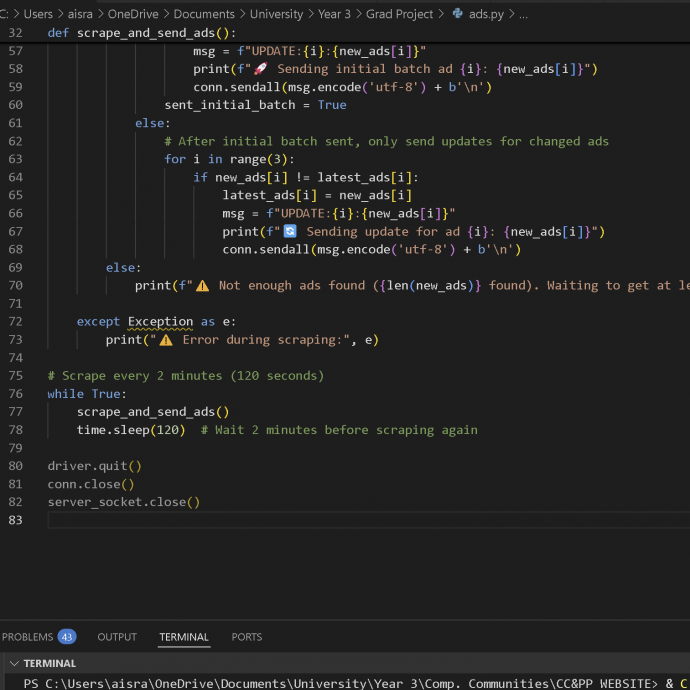

With this, the titles were printed out successfully. I then turned the scraper into a server using WebSockets and started working on the client (Blender) side.
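Whatever carries the data, the titles need a message format. Assuming the link is a plain TCP socket from Python's `socket` module (the `ConnectionResetError` described later is the kind of error that module raises), one simple option is newline-delimited JSON: each message is a JSON list of titles followed by `\n`. This matters because a single `recv()` call can return half a message or several messages at once, so the client has to buffer bytes until a full line arrives. The names `encode_message` and `MessageBuffer` are hypothetical; a WebSocket library would handle this framing itself.

```python
import json
from typing import List


def encode_message(titles: List[str]) -> bytes:
    """Serialise one batch of ad titles as a single newline-terminated line."""
    # json.dumps escapes any newline inside a title, so "\n" only ever marks
    # the end of a message.
    return (json.dumps(titles) + "\n").encode("utf-8")


class MessageBuffer:
    """Accumulates raw bytes from recv() and returns complete messages."""

    def __init__(self) -> None:
        self._pending = b""

    def feed(self, chunk: bytes) -> List[List[str]]:
        self._pending += chunk
        messages = []
        # Split off every complete line; keep any trailing partial line.
        while b"\n" in self._pending:
            line, self._pending = self._pending.split(b"\n", 1)
            if line.strip():
                messages.append(json.loads(line.decode("utf-8")))
        return messages


if __name__ == "__main__":
    wire = encode_message(["First ad title"]) + encode_message(["Second ad title"])
    buffer = MessageBuffer()
    # Simulate TCP delivering the bytes in arbitrary chunks.
    print(buffer.feed(wire[:10]))  # prints []
    print(buffer.feed(wire[10:]))  # prints [['First ad title'], ['Second ad title']]
```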

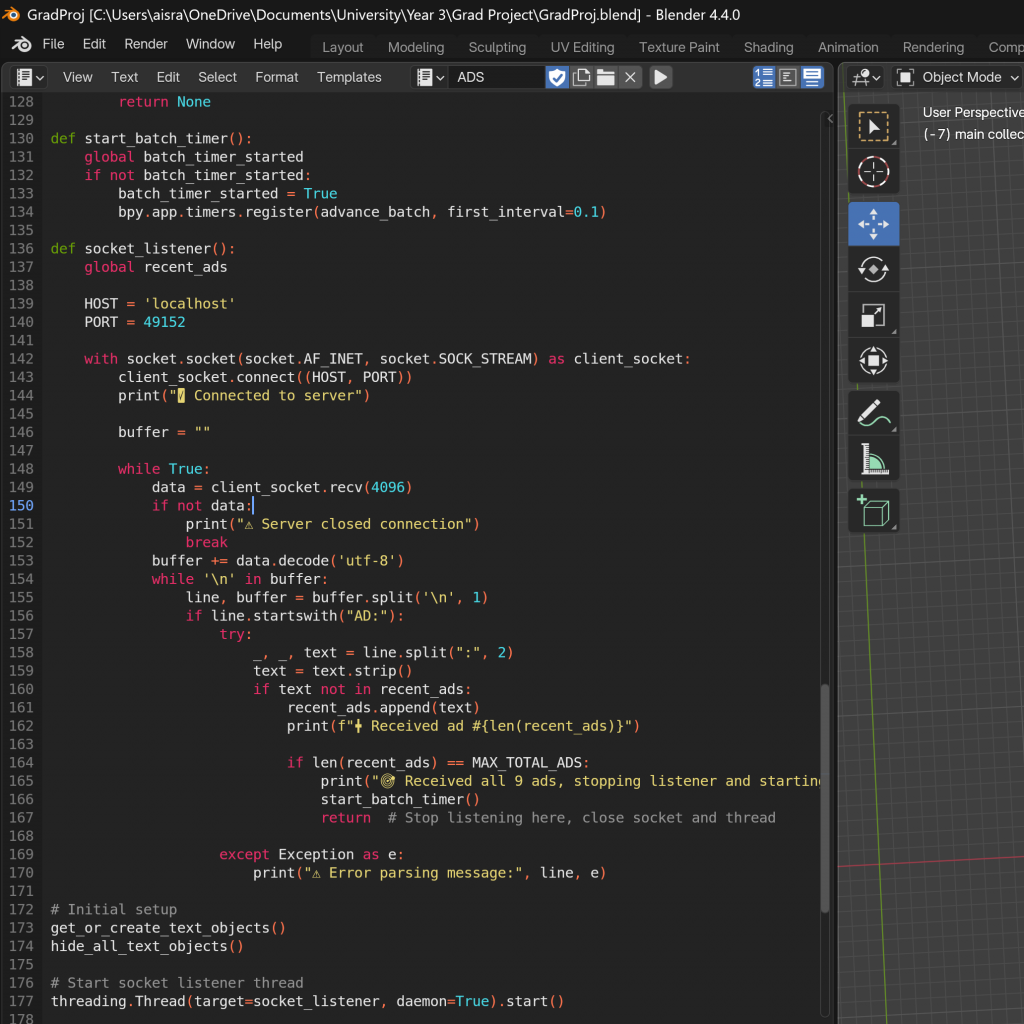

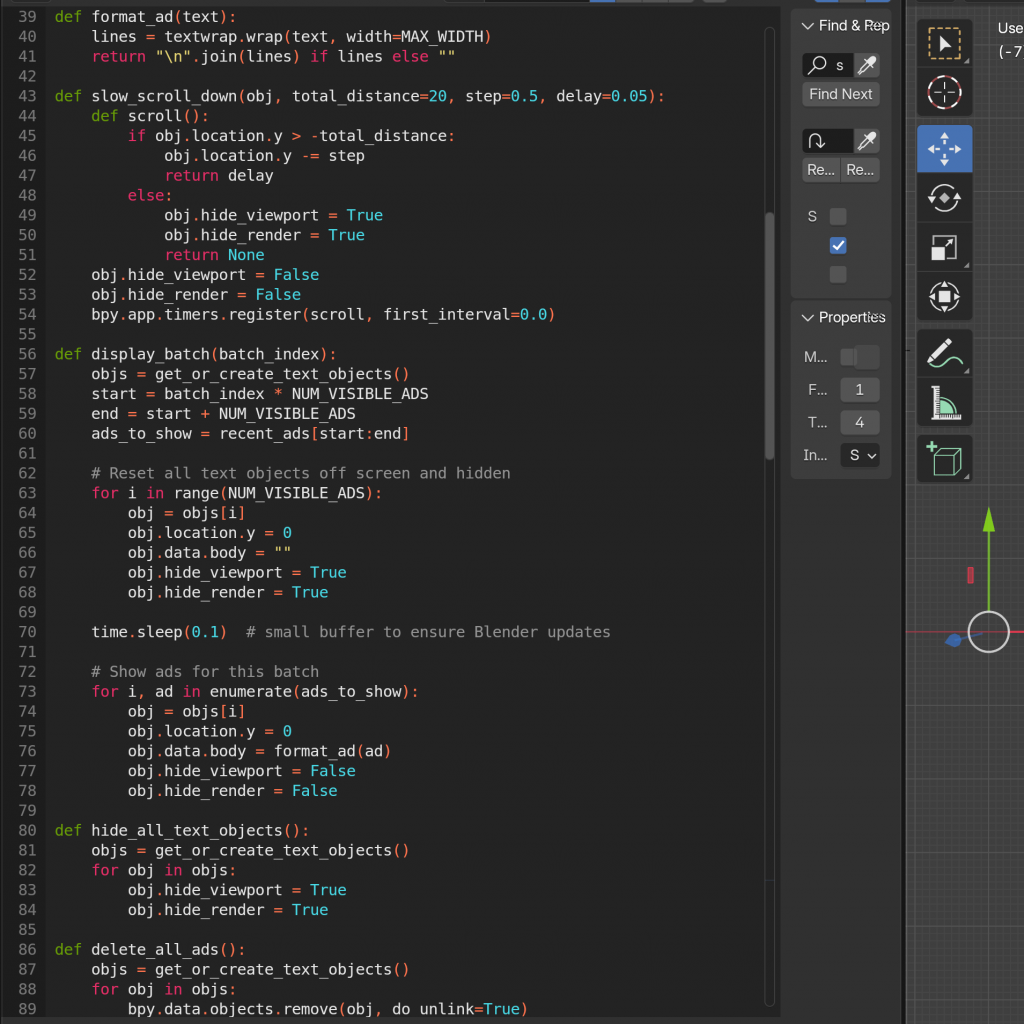

I set it up so that the client receives the ad titles from the server, creates a 2D text object in Blender's scene for each received title, and swaps them out whenever new ads are scraped and sent to Blender. For a long time nothing showed up or worked, and I went through many small adjustments and trials to get the text to appear on the plane. Since `bpy.app.timers.register` is an API call meant for the main thread, and I was using threading, I thought this might be causing the issues. I then tried to resolve it by relying on the timer itself, since timer callbacks are designed to run in the main thread, but this did not work.
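A pattern shown in Blender's `bpy.app.timers` documentation for this situation is to let the network thread only put data into a thread-safe `queue.Queue`, and to have a registered timer function drain that queue on the main thread, where touching `bpy` data is safe. The sketch below keeps the queue logic separate from `bpy` so it can run outside Blender. The names `incoming`, `receive_loop` and `apply_pending`, the text object name and the 0.5-second interval are assumptions, and the Blender-specific lines appear only as comments.

```python
import queue
import threading
from typing import Callable, Iterable

# Thread-safe hand-off point between the socket thread and Blender's main thread.
incoming: "queue.Queue[str]" = queue.Queue()


def receive_loop(titles: Iterable[str]) -> None:
    """Runs in a background thread. It must not touch bpy; it only enqueues.

    In the real client, `titles` would come from the socket connection.
    """
    for title in titles:
        incoming.put(title)


def apply_pending(update_text: Callable[[str], None]) -> float:
    """Timer body: drain the queue on the main thread and update the scene.

    Returning a float asks Blender to call the timer again after that many
    seconds (returning None would unregister it).
    """
    while True:
        try:
            title = incoming.get_nowait()
        except queue.Empty:
            break
        update_text(title)
    return 0.5


# Inside Blender (not runnable outside it), this might be wired up as:
#   def set_text(title):
#       bpy.data.objects["AdText"].data.body = title  # hypothetical object name
#   bpy.app.timers.register(lambda: apply_pending(set_text))

if __name__ == "__main__":
    worker = threading.Thread(target=receive_loop, args=(["Ad one", "Ad two"],))
    worker.start()
    worker.join()
    shown = []
    apply_pending(shown.append)
    print(shown)  # prints ['Ad one', 'Ad two']
```

The key design choice is that the background thread never calls into `bpy` at all; only the timer callback, which Blender runs on the main thread, modifies the scene.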

I tried other things, such as printing the data to Blender's console, but Blender was not even receiving the data properly, and I got the error `ConnectionResetError: [WinError 10054] An existing connection was forcibly closed by the remote host`. ChatGPT explained that this meant the server (in this case, my scraping script) closed the connection while the Blender client was still trying to read data from it, and that this usually happens because of network issues or the server process terminating unexpectedly.

So I made the following changes:

- The server retries every 2 seconds if it fails to accept a connection.

- If sending data fails (e.g., due to the client disconnecting), the server will close the connection and break out of the loop.

- On the Blender side, if the connection is reset or there’s any socket error, the client will print the error and try to reconnect after 5 seconds.
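The Blender-side reconnect behaviour can be sketched as a loop that catches `OSError` (of which `ConnectionResetError` is a subclass), waits, and tries again. The function `connect_with_retry` and its `max_attempts` cap are assumptions; the 5-second default delay follows the list above. Catching the broad `OSError` also covers `ConnectionRefusedError`, which is raised when the server is not listening yet.

```python
import socket
import time
from typing import Optional


def connect_with_retry(
    host: str,
    port: int,
    delay: float = 5.0,
    max_attempts: Optional[int] = None,
) -> Optional[socket.socket]:
    """Try to connect, sleeping `delay` seconds between failed attempts.

    Returns the connected socket, or None once max_attempts is reached.
    max_attempts=None retries forever.
    """
    attempt = 0
    while max_attempts is None or attempt < max_attempts:
        attempt += 1
        try:
            sock = socket.create_connection((host, port), timeout=5)
            sock.settimeout(None)  # back to blocking reads once connected
            return sock
        except OSError as exc:  # ConnectionRefusedError, ConnectionResetError, ...
            print(f"Connection failed ({exc}); retrying in {delay} s")
            if max_attempts is None or attempt < max_attempts:
                time.sleep(delay)
    return None


if __name__ == "__main__":
    # Grab a free local port and release it, so nothing is listening there.
    probe = socket.socket()
    probe.bind(("127.0.0.1", 0))
    free_port = probe.getsockname()[1]
    probe.close()
    print(connect_with_retry("127.0.0.1", free_port, delay=0.1, max_attempts=2))
```

In the client, a read loop would call this again whenever `recv()` raises `ConnectionResetError` or returns `b""`, which gives the "print the error and reconnect" behaviour described above.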

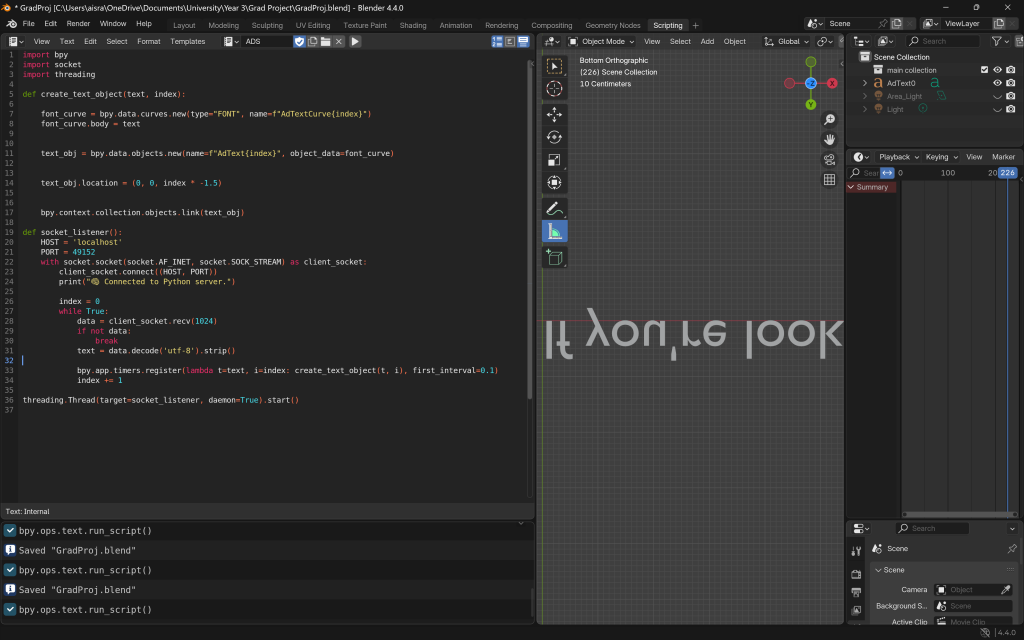

It did not work that day; I am not sure exactly what changed, but the next day it started working. At first each ad was visualised as soon as it arrived, and upside down, but fixing the positioning was simple. Next, I had to sort out the spacing and timing, and implement further logic so that exactly three ads appear at once (to fill the three billboards in the animation) and never fewer, so that no billboard is left empty. Newly found ads also had to replace one of the old ones, so that the constant renewal of media is portrayed.

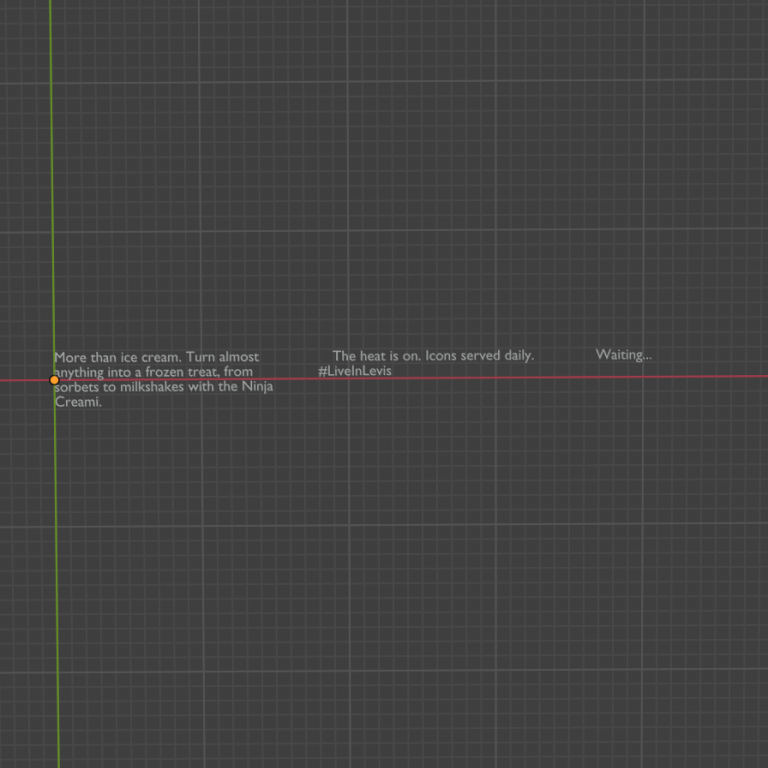

One problem I noticed was that the scraper found ads quite slowly, so often only one ad would be visualised at a time, with more appearing as they were scraped. This was a problem because I needed three ads on screen at all times so that the billboards were never empty. Another problem was that new ads kept arriving and obscuring the old ones. I tried deleting the previous ad whenever a new one came in, but this made the shortage of ads arriving at any one time even worse.

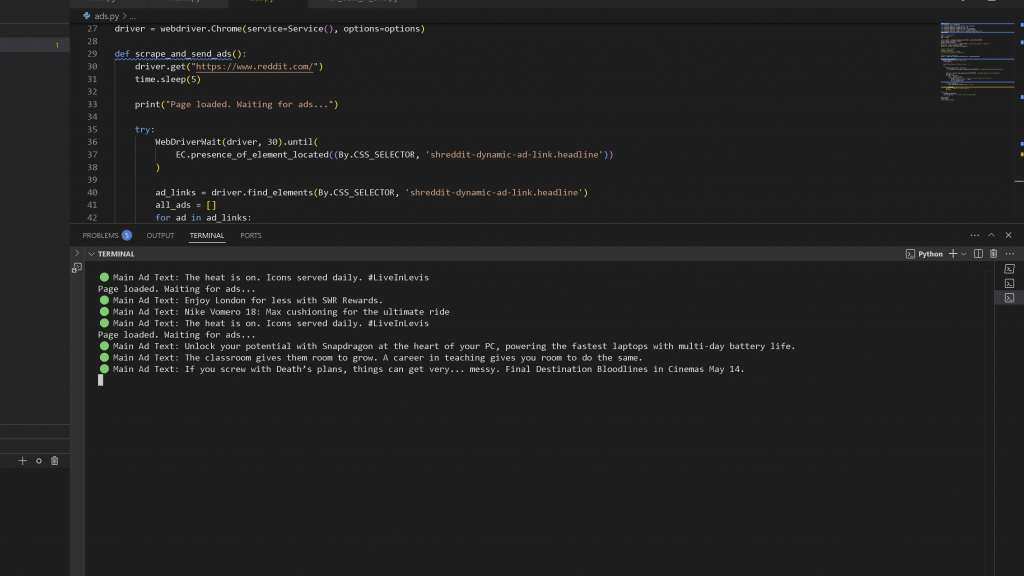

So I added code that scrapes ads continuously and maintains a rolling list of the latest three, while the Blender script updates the corresponding three text objects in place instead of creating or deleting objects every time. In other words, the VS Code side only scraped and sent updates when the content changed, and Blender kept three static objects and simply updated their text when needed.

I also added logic so that the scraper waits until three ads have been found and only then sends them to Blender to be visualised. I thought this would reduce lag.
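The rolling list and the two sending rules (wait until three ads exist, and send only when something changed) can be sketched with `collections.deque(maxlen=3)`, which drops the oldest item automatically when a fourth is appended. The class name `RollingAds` and its `add` method are hypothetical; the returned batch stands in for whatever is written to the socket.

```python
from collections import deque
from typing import List, Optional


class RollingAds:
    """Keeps the latest `size` ad titles and decides when to send them."""

    def __init__(self, size: int = 3) -> None:
        self.size = size
        self.latest: "deque[str]" = deque(maxlen=size)

    def add(self, title: str) -> Optional[List[str]]:
        """Record a newly scraped title.

        Returns the batch to send, or None if the title is already on screen
        (nothing changed) or there are not yet `size` ads to fill the billboards.
        """
        if title in self.latest:
            return None  # same ad scraped again, so nothing to update
        self.latest.append(title)  # the oldest title drops off automatically
        if len(self.latest) < self.size:
            return None  # wait until every billboard can be filled
        return list(self.latest)


if __name__ == "__main__":
    rolling = RollingAds()
    for title in ["Ad A", "Ad B", "Ad A", "Ad C", "Ad D"]:
        batch = rolling.add(title)
        if batch is not None:
            print(batch)
    # prints ['Ad A', 'Ad B', 'Ad C'] then ['Ad B', 'Ad C', 'Ad D']
```

On the Blender side, `batch[i]` would simply be written into the text of the i-th of the three existing text objects. This sketch only compares against the three titles currently held, so an ad seen earlier than that could still come back.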

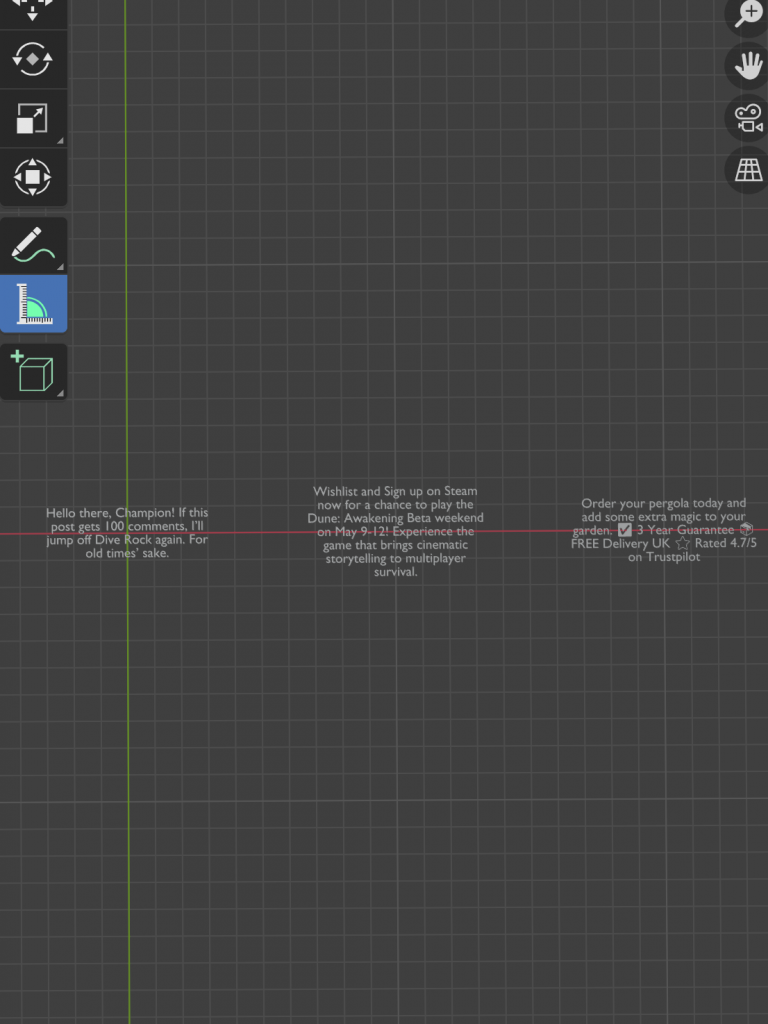

This then worked in Blender, with three ads visualised at a time. They initially overlapped, but the spacing was fixed quite easily. Subsequent ads updated the text objects as they arrived.

The setup now displayed three ads effectively: they were sent together once scraped, then updated as new ads were obtained. They were sometimes very slow to change, or overly quick, but I attributed this to the nature of the site and of scraping.

I then added the animation logic. What I wanted was for three ads to show, then the next three scraped, then the next three. Initially, I wanted the whole first batch to scroll down to follow the character, but this was not viable because of the way the scene was drawn, with the billboards spaced apart horizontally. So I made only the leftmost ad of the first batch scroll down after a few seconds of being static, after which the display switches to the subsequent batches.
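The timing can be worked out independently of Blender as a frame schedule: split the nine titles into batches of three, give each batch a fixed number of seconds on screen, and start the leftmost ad's scroll partway through the first batch. The frame rate, hold time and scroll delay below are assumed values, and `build_schedule` is a hypothetical name. In Blender, each entry could then drive text changes and keyframes, for example via `obj.keyframe_insert("location", frame=...)`.

```python
from typing import Dict, List


def build_schedule(
    titles: List[str],
    batch_size: int = 3,
    seconds_per_batch: float = 6.0,
    scroll_after_seconds: float = 2.0,
    fps: int = 24,
) -> List[Dict[str, object]]:
    """Return one entry per batch with its start/end frames and titles.

    Only the first batch gets a scroll start frame, for its leftmost ad
    (index 0), after it has been static for `scroll_after_seconds`.
    """
    frames_per_batch = round(seconds_per_batch * fps)
    schedule = []
    for i in range(0, len(titles), batch_size):
        n = i // batch_size
        start = n * frames_per_batch + 1  # Blender scenes start at frame 1 by default
        entry: Dict[str, object] = {
            "start_frame": start,
            "end_frame": start + frames_per_batch - 1,
            "titles": titles[i:i + batch_size],
        }
        if n == 0:
            entry["leftmost_scroll_frame"] = start + round(scroll_after_seconds * fps)
        schedule.append(entry)
    return schedule


if __name__ == "__main__":
    ads = [f"Ad {k}" for k in range(1, 10)]  # placeholder titles
    for entry in build_schedule(ads):
        print(entry)
```

With the defaults, the three batches occupy frames 1 to 144, 145 to 288 and 289 to 432, and the leftmost ad starts scrolling at frame 49.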

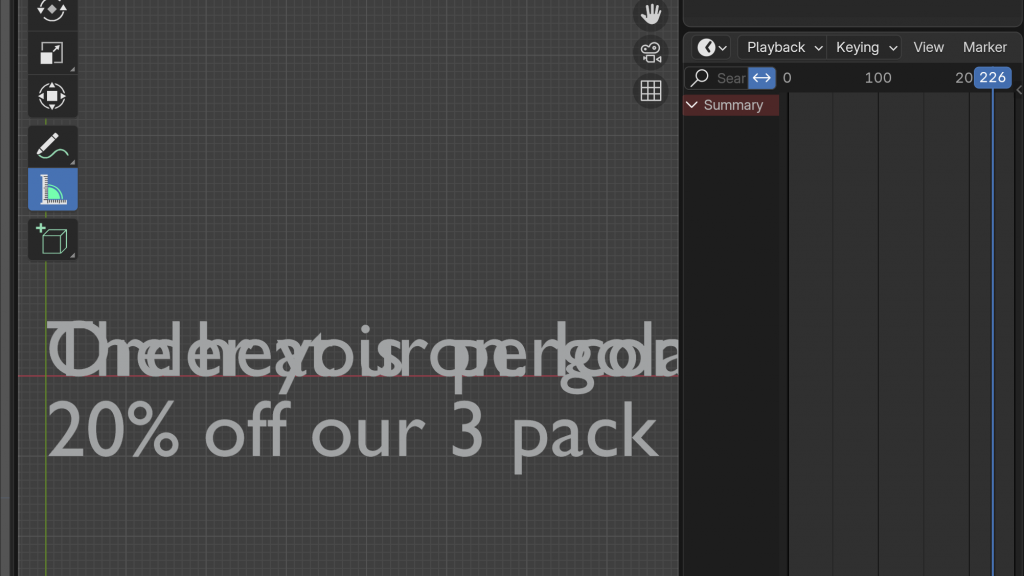

While I initially aimed to use a single object to contain all the ads for smoother animation, similar to how I handled previous media types, that approach did not quite fit here. In this case, only one ad needed to scroll down, while the other two simply switched to the next set from the batch of nine ads that had been sent. This mismatch in animation behaviour made the single-container setup impractical, so I handled the ads individually instead. As seen below, making it one object made it extremely difficult to align the ads and get their spacing right, which was important when overlaying them on the animation. The difference between using one object and using three, once the animation is implemented, can be seen above.

A single-object version is probably achievable, but figuring it out was taking up too much of my time, and I had to move on quickly to manage my time properly.

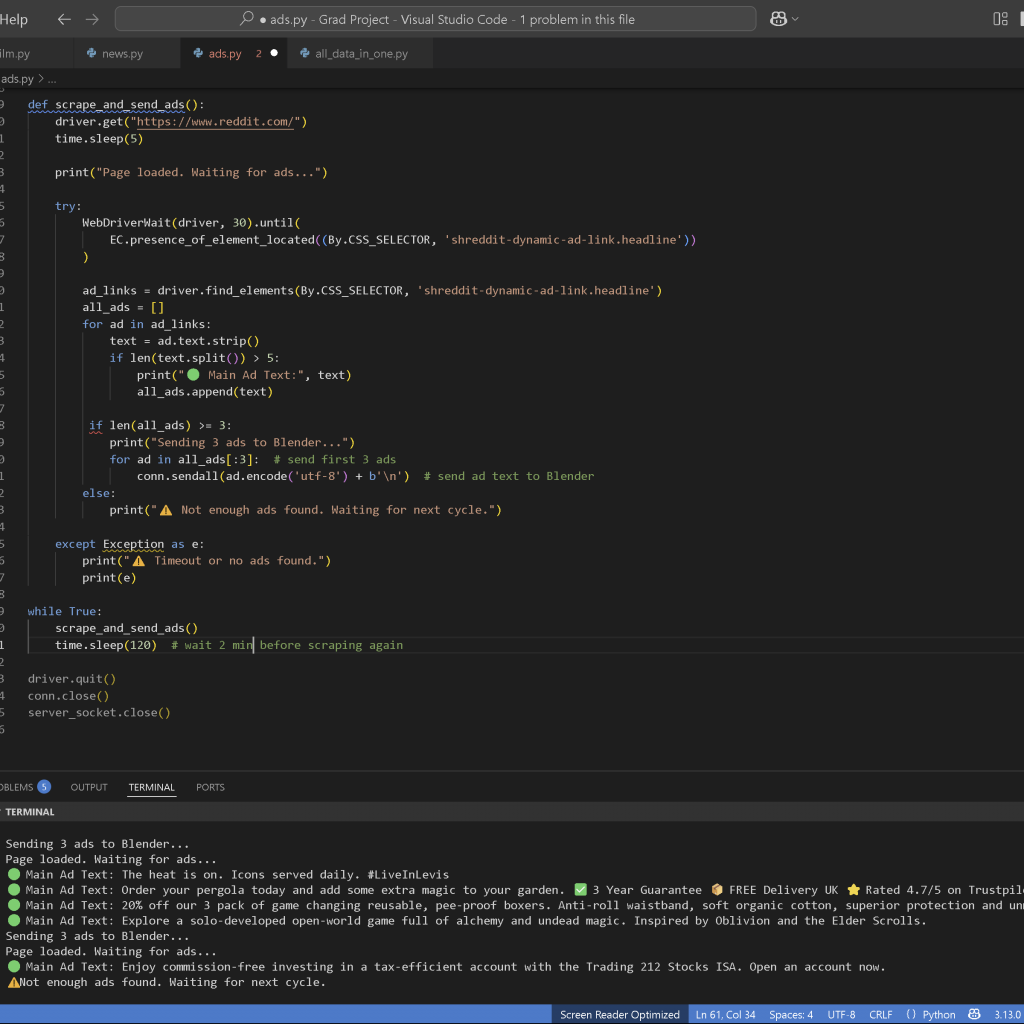

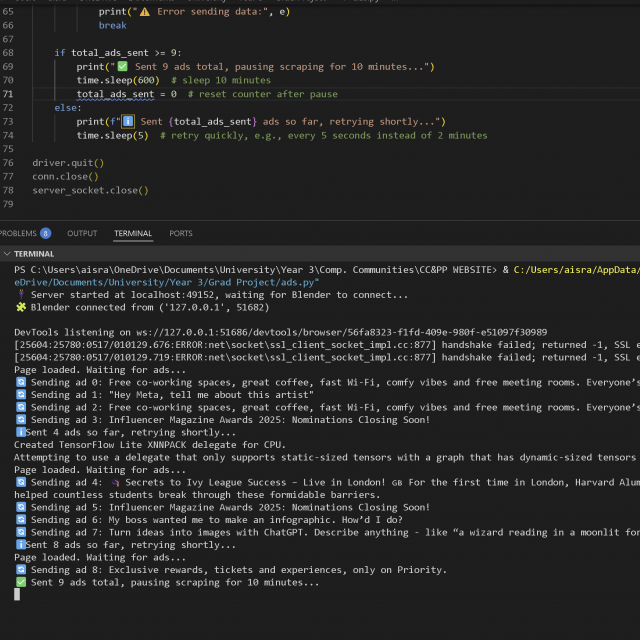

As I ran into glitches after adding this animation logic, I added code to limit scraping to occasional intervals, letting it collect data and then re-scrape after some time. This was to help prevent excessive scraping that could cause crashes or slowdowns on either end. I updated the client-side logic in Blender to reflect this change and experimented a bit. The data was still shown live in Blender as it was found, but scraping happened less frequently.

At this point, I realised that the earlier problem of ads sometimes updating too slowly was because they were in fact updating, but with the same ad that had been scraped again. I then added logic to send data only when an update or change was found. I also wanted each ad to be unique, since the script was re-scraping the same ads and sending them again. So I made sure it would only finish after scraping 9 unique ads, so they could be visualised in three batches of three. While this took some time, it was worth it, as it made the visualisation a bit more engaging.
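Collecting nine unique ads while scraping only at intervals can be sketched as a loop that runs one scraping pass, keeps any titles it has not seen before, and sleeps between passes until it has enough. `scrape_once` stands in for the Selenium scraping pass, and the interval and pass limit are assumed values rather than the project's actual settings. A plain `dict` is used as an ordered set, so titles keep the order in which they were first seen.

```python
import time
from typing import Callable, List


def collect_unique_ads(
    scrape_once: Callable[[], List[str]],
    target: int = 9,
    interval_s: float = 60.0,
    max_passes: int = 20,
) -> List[str]:
    """Scrape repeatedly until `target` unique titles are found.

    Sleeps `interval_s` seconds between passes to avoid hammering the site,
    and gives up after `max_passes`, returning whatever was found.
    """
    seen: dict = {}  # insertion-ordered, so it doubles as an ordered set
    for pass_no in range(max_passes):
        for title in scrape_once():
            seen.setdefault(title, None)  # repeats of a known ad are ignored
            if len(seen) == target:
                return list(seen)
        if pass_no < max_passes - 1:
            time.sleep(interval_s)
    return list(seen)


if __name__ == "__main__":
    # Three simulated scraping passes with overlapping results.
    passes = iter([["A", "B", "C"], ["B", "C", "D"], ["E", "F", "G", "H", "I", "J"]])
    print(collect_unique_ads(lambda: next(passes), interval_s=0))
    # prints ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I']
```

The returned list can then be split into three batches of three for the billboards.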

Once this was done, and after a break during which I explored scraping news data and was confronted with the reality of what I could complete before submission, I returned to this with a fresh perspective. I realised that the overall process needed simplifying, so I made several adjustments to streamline everything, from how data was scraped to how it was visualised, removing unnecessary complexity wherever possible.

I decided to remove the looping mechanism and the 10-minute sleep–re-scrape–send–update cycle, as it proved unviable to complete within the project scope. Instead, I opted for a simpler, one-time setup: the server scrapes all 9 ads in one go and sends them to Blender upon connection. Once Blender receives the full batch, it closes the socket and stops listening. Blender then visualises the ads procedurally, one at a time: one ad scrolls, while the others simply switch in sequence. The core animation logic remained largely unchanged, except that instead of updating dynamically as new data arrived, Blender now already has all 9 ads and iterates through them, showing each for a set number of seconds. After each ad is shown, its object is deleted, making way for the next.
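The simplified one-shot exchange can be sketched with the standard `socket` module: the server sends the whole batch as one JSON document and closes its side, and the client reads until `recv()` returns an empty bytes object (the peer's close), then closes its own socket. Using the close itself as the end-of-message marker removes the need for any other framing. The function names, the local address and the JSON format are assumptions rather than the project's actual code; inside Blender, the returned list would then be handed to the animation logic.

```python
import json
import socket
import threading
from typing import List


def serve_once(server_sock: socket.socket, titles: List[str]) -> None:
    """Accept one client, send every title in a single JSON payload, close."""
    conn, _addr = server_sock.accept()
    with conn:
        conn.sendall(json.dumps(titles).encode("utf-8"))
    # Leaving the with-block closes the connection, which tells the client
    # that the batch is complete.


def receive_all(host: str, port: int) -> List[str]:
    """Connect, read until the server closes the connection, then stop."""
    chunks = []
    with socket.create_connection((host, port)) as sock:
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # b"" means the server has closed its side
                break
            chunks.append(chunk)
    return json.loads(b"".join(chunks).decode("utf-8"))


if __name__ == "__main__":
    ads = [f"Ad {k}" for k in range(1, 10)]  # placeholder titles
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]
        threading.Thread(target=serve_once, args=(server, ads)).start()
        print(receive_all("127.0.0.1", port) == ads)  # prints True
```

Because the client stops as soon as the server closes, nothing is left listening in the background once the nine ads have arrived.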